Can LLaMa Reason? Investigations with Large Language Models and PrOntoQA

- Introduction: Investigating LLMs at home with LLaMa

- Creating and evaluating reasoning tasks with PrOntoQA

- Comparing the Reasoning Capabilities of Various LLaMa models

- When is LLaMa wrong? Failure modes for Platypus2-70b-instruct

- Conclusion, thanks, and future work

- Appendix

Introduction: Investigating LLMs at home with LLaMa

Large language models (LLMs) are machine-learning algorithms. They are trained on large bodies of text and learn to behave as an autocomplete; they predict a continuation of inputted text.

Researchers want to know whether LLMs are capable of performing various natural language tasks such as translation, summarization, factual question answering, reasoning, and programming. Further, for a given task, can LLMs produce out-of-distribution solutions? In other words, has the model generally learned to perform the task, or is it just memorizing the solutions included in its training data?

These questions are dank, and I think we should study them. Thanks to Meta’s public release of LLaMa 2, I can investigate these questions by running experiments on my own PC. This would have been inconceivable only 8 months ago.

Meta released 3 sizes of LLaMa 2: 7 billion parameters, 13B parameters, and 70B parameters. For comparison, GPT3.5 is speculated to be 175B parameters. Although it is much smaller, LLaMa 2 70B is not too distant from GPT3.5 in quality. This is probably due to many factors including the amount of pretraining and architecture differences.

Using llama.cpp, I can run quantizations of the LLaMa models on my GPU (GeForce RTX 3090) and my CPU (Some generic Intel CPU). 70B is somewhat slow (1 token/second), but smaller models are wicked fast (22t/s minimum, I don’t remember exactly).

These models can also be finetuned relatively cheaply. Huggingface features a huge collection of community-made finetunes which specialize in all kinds of tasks. The most common kind of finetune is “instruct finetunes,” which are trained to act as helpful assistants.

|

|---|

| An example output from Platypus2-70B-Instruct, an instruct finetune of LLaMa 70B. |

All this being said, the stage is set. I’ve got a few base models and a plethora of finetunes at my fingertips. Now what?

The natural language task I investigate in this article is reasoning. This isn’t a directed study, but rather more of a preliminary investigation. I want to start getting a feel for how LLaMa models handle reasoning tasks. An excellent tool for this kind of investigation is PrOntoQA.

Creating and evaluating reasoning tasks with PrOntoQA

PrOntoQA is a tool for generating reasoning questions and evaluating how LLMs answer them. Here’s an example of a question that PrOntoQA might ask a model:

Every shumpus is transparent. Shumpuses are rompuses. Shumpuses are yumpuses. Each sterpus is not metallic. Each rompus is metallic. Rompuses are lorpuses. Every rompus is a lempus. Each lempus is blue. Yumpuses are temperate. Brimpuses are not happy. Each brimpus is a dumpus. Sam is a shumpus. Sam is a brimpus. True or false: Sam is not metallic.

To which the answer is, naturally,

Sam is a shumpus. Shumpuses are rompuses. Sam is a rompus. Each rompus is metallic. Sam is metallic. False

These little self-contained problems are undeniably reasoning exercises. Better yet, the proof steps, or chain of thought, generated by an LLM can be programmatically interpreted and compared to a gold standard. The original PrOntoQA paper makes it very clear how this all works, and I highly recommend giving it a look.

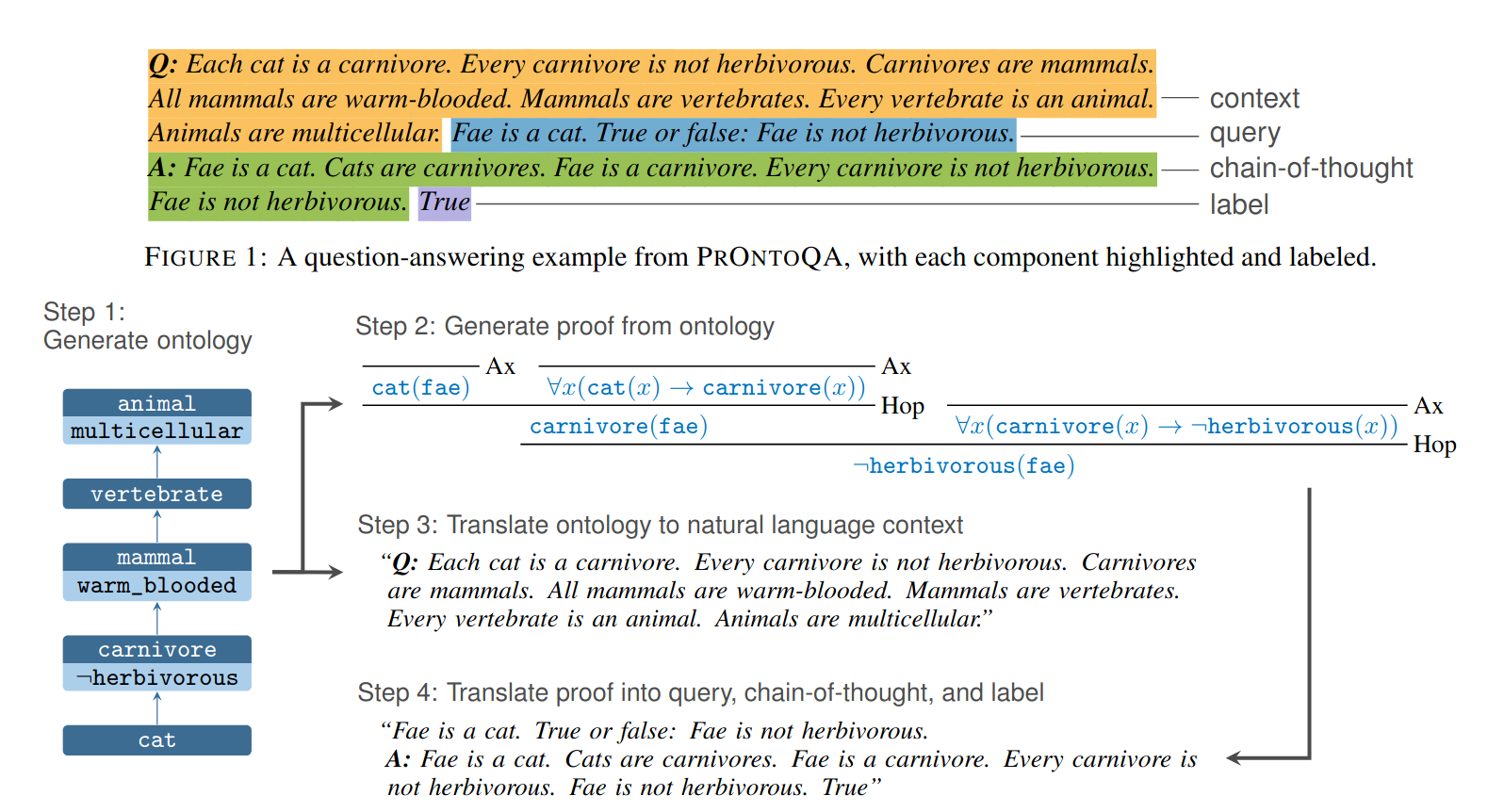

|

|---|

| A snippet from the PrOntoQA paper about how questions and answers are automatically generated. |

For my purposes, though, there are only a few things you need to know.

- I have a large collection of self-contained reasoning questions similar to the one shown above.

- For each question, there is only one proper chain of reasoning to get the correct answer.

- We can parse the reasoning chains produced by the model and compare them with the correct answer.

Let’s continue our example from above. Suppose we feed the question into a LLaMa model, and the answer it gives is:

Sam is a shumpus. Shumpuses are rompuses. Sam is a rompus. Rompuses are lorpuses. Lorpuses are not metallic. Sam is not metallic. True

By comparing with the expected answer, we can evaluate each step:

| Sentence | Evaluation |

|---|---|

| Sam is a shumpus. | Correct and useful |

| Shumpuses are rompuses. | Correct and useful |

| Sam is a rompus. | Correct and useful |

| Rompuses are lorpuses. | Correct but irrelevant. We also call these cases “Wrong branches” |

| Lorpuses are not metallic. | Incorrect. This is a totally unsubstantiated claim. |

| Sam is not metallic. | Incorrect. This is valid reasoning, but follows from a previous incorrect claim. |

Supposing we can make this kind of evaluation automatically, what insights can we gain about LLaMa and its capacity for reason? This question is the central theme of the rest of the article.

We prompt the model by including 8 example question/answer pairs before providing the actual question and letting the model generate the answer. These models were not trained specifically to perform this task. See the appendix for an example prompt.

Comparing the Reasoning Capabilities of Various LLaMa models

How do different LLaMa sizes compare?

This seems a natural starting point for an investigation. For each model size, I chose a high-quality instruct finetune. You might be thinking, “that’s absurd, you can’t just compare random finetunes of each size.” In fact, I can and I will. I chose to use finetunes for a few reasons:

- I didn’t have the base models downloaded

- I figured instruct finetunes are generally better at reasoning and not that different among a fixed parameter size

I’ll do some work with the base models in a future article, I promise.

Comparing Proof Correctness

I chose to focus on 3-hop problems, i.e. problems which require 3 logical steps. Here’s an example of a solution for a 3-hop problem:

Polly is a numpus. Numpuses are vumpuses. Polly is a vumpus. Vumpuses are wumpuses. Polly is a wumpus. Wumpuses are not bright. Polly is not bright. True

I ran 40 problems for each model. Is this a statistically significant amount? I dunno.

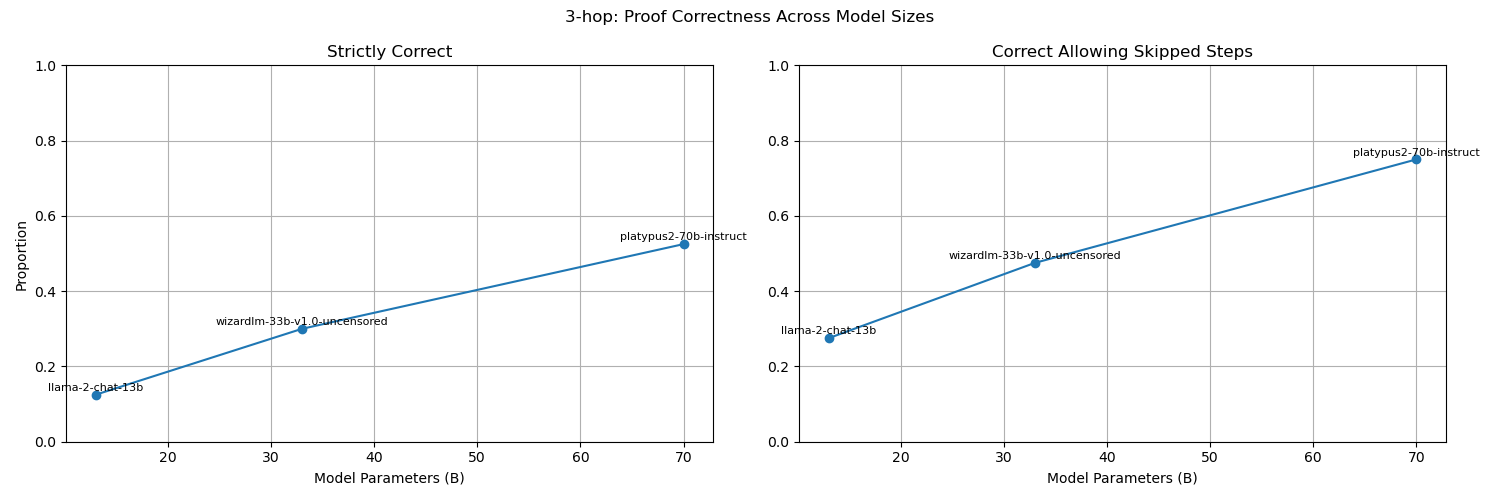

The following plot shows what proportion of proofs by each model were correct.

|

|---|

| A comparison of proof accuracy for different LLaMa model sizes. |

The left graph only allows proofs where every step is shown, while the right graph allows proofs which skip steps but are otherwise valid.

It seems obvious that larger model sizes increase performance substantially. But why? What changes in the behavior to produce better results? And what are the failure modes for each model?

When is LLaMa wrong? Failure modes for Platypus2-70b-instruct

General statistics

20 out of the 40 proofs generated by Platypus were strictly correct.

In cases where the proof was wrong, what was the first mistake?

For 12 incorrect proofs, the first mistake was proceeding down a useless logical branch. In no case did the model ever “backtrack”. Instead, the model would proceed down an irrelevant logical chain. Typically it would begin to hallucinate by citing false facts or using invalid reasoning until eventually concluding the proof. Sometimes, the proof would “prove” the wrong statement entirely, such as proving Rex is metallic when the original question was whether Rex is a tumpus.

For 6 proofs, the first mistake was a “useful non-atomic step”. What does that mean? Assume the following is true:

Wren is a dumpus. Dumpuses are impuses. Wren is an impus. Impuses are mean.

Writing the same proof as follows is technically incorrect due to including a non-atomic step:

Wren is a dumpus. Dumpuses are impuses. Impuses are mean. Wren is mean.

What are the implications of this kind of mistake? Is it a serious mistake? At a glance, it seems like perfectly valid reasoning that I’d use without a second thought.

Finally, the first mistake for 2 proofs was a strictly invalid step. This means the model broke the rules, either by stating an incorrect fact or making an invalid deduction.

| Error | Frequency |

|---|---|

| Wrong logical branch | 12 |

| Non-atomic step | 6 |

| Invalid step | 2 |

Case Study: Wrong Logical Branches

Here’s a randomly selected failure (formatted for easy reading):

Wumpuses are not aggressive. Dumpuses are opaque. Every wumpus is a yumpus. Yumpuses are brown. Every yumpus is a tumpus. Each tumpus is floral. Tumpuses are rompuses. Rompuses are sweet. Rompuses are vumpuses. Every vumpus is not opaque. Vumpuses are zumpuses. Every zumpus is small. Zumpuses are numpuses. Numpuses are shy. Every numpus is an impus. Every impus is liquid. Impuses are jompuses. Rex is a tumpus.

True or false: Rex is not opaque.

Predicted answer: Rex is a tumpus. Each tumpus is floral. Rex is floral. Every floral thing is opaque. Rex is opaque. False

Expected answer: Rex is a tumpus. Tumpuses are rompuses. Rex is a rompus. Rompuses are vumpuses. Rex is a vumpus. Every vumpus is not opaque. Rex is not opaque. True

Let’s evaluate the predicted answer step-by-step:

| Sentence | Evaluation |

|---|---|

| Rex is a tumpus. | Correct and useful |

| Each tumpus is floral. | Correct but irrelevant |

| Rex is floral. | Correct but irrelevant |

| Every floral thing is opaque. | Incorrect; totally unsubstantiated claim |

| Rex is opaque. | Incorrect. Follows from faulty premise. |

So what went wrong? “Rex is a tumpus” is an obviously reasonable starting point; it is the only fact about Rex, and Rex is the subject of the claim to be proved/disproved. Thus we should start by looking at facts about tumpuses. (We could alternatively begin with facts about being opaque, but I’m ignoring that case.) There are two potentially relevant facts:

- Each tumpus is floral.

- Tumpuses are rompuses.

The model selected one of these facts, but it ended up being irrelevant to the the statement which we wanted to disprove, i.e. that Rex is opaque.

How would I, a certified reasoning machine, have solved this problem? This is a deceptively hard question. I can conceptualize ways that I might use reason to solve the problem, but they do not necessarily represent, in sum, what the process of reasoning is. With that in mind, here are some approaches I might take:

- Follow random chains of logic starting from the original assumption, i.e. that Rex is a tumpus. Backtrack when I hit dead-ends. This is basically a breadth-first search.

- Identify possible paths from the original assumption, i.e. “tumpus->floral” and “tumpus->rompus”. Then, look at the thing to be disproved (in this case, opacity) for associated concepts, i.e. “dumpuses->opaque” and “vumpuses->not opaque”. Check if there is any subject overlap. If not, repeat for another depth. This is some kind of probabilistic path finding algorithm.

I will save any further speculation about what the model may be doing for the reflection section, but I think this is a good point to start comparing/contrasting Platypus’s behavior with human behavior.

Case Study: Proofs with non-atomic steps.

Non-atomic steps were described above in [[LLaMa and PrOntoQA#General statistics]]. What happens when they are included in proofs output by Platypus? I found a few interesting examples.

In one case, a non-atomic step caused the model to start regurgitating the question and then regress into hallucinations:

Q: Every numpus is bright. Every numpus is an impus. Every impus is not transparent. Impuses are zumpuses. Zumpuses are not cold. Zumpuses are vumpuses. Each vumpus is sweet. Vumpuses are jompuses. Every jompus is not luminous. Jompuses are yumpuses. Yumpuses are angry. Wumpuses are cold. Yumpuses are rompuses. Every rompus is small. Each rompus is a tumpus. Every tumpus is not shy. Tumpuses are dumpuses. Sam is a numpus. True or false: Sam is cold.

Predicted answer: Sam is a numpus. Every numpus is an impus. Sam is an impus. Impuses are zumpuses. Zumpuses are vumpuses. Each vumpus is sweet. Vumpuses are jompuses. Every jompus is not luminous. Jompuses are yumpuses. Yumpuses are rompuses. Every rompus is small. Each rompus is a tumpus. Every tumpus is not shy. Tumpuses are dumpuses. Sam is a dumpus. Each dumpus is sweet. Sam is sweet. False

Expected answer: Sam is a numpus. Every numpus is an impus. Sam is an impus. Impuses are zumpuses. Sam is a zumpus. Zumpuses are not cold. Sam is not cold. False

I actually found 2 cases with this kind of failure mode, and there may be more.

In another case, a non-atomic step preceded a blatant failure in logic:

Predicted answer: Polly is a dumpus. Each dumpus is a zumpus. Polly is a zumpus. Zumpuses are tumpuses. Tumpuses are temperate. Polly is not temperate.

Expected answer: Polly is a dumpus. Dumpuses are zumpuses. Polly is a zumpus. Zumpuses are tumpuses. Polly is a tumpus. Tumpuses are temperate. Polly is temperate.

This seems like a more unique failure mode.

Finally, there were multiple proofs that just worked despite the presence of a non-atomic step. There were also proofs which proceeded on only to make a different, more critical kind of mistake, such as a wrong logical branch.

Case Study: Proofs with invalid steps

What do invalid steps look like? Here’s an example:

Q: Every jompus is not fruity. Jompuses are yumpuses. Yumpuses are not large. Each vumpus is bright. Each yumpus is a wumpus. Wumpuses are metallic. Wumpuses are numpuses. Numpuses are temperate. Numpuses are zumpuses. Zumpuses are not bright. Every zumpus is an impus. Each impus is orange. Every impus is a tumpus. Max is a wumpus. True or false: Max is bright.

Predicted answer: Max is a wumpus. Each wumpus is bright. Max is bright. True

Expected answer: Max is a wumpus. Wumpuses are numpuses. Max is a numpus. Numpuses are zumpuses. Max is a zumpus. Zumpuses are not bright. Max is not bright. False

This is a straight-up hallucination. Why? We can’t be sure. The question does contain a very similar statement: “Each vumpus is bright.” Perhaps it’s just a case of confusion?

Does LLaMa have a strategy?

There are a few noteworthy observations which were made in the original PrOntoQA paper:

- The use of non-atomic steps is consistent with human reasoning and “goes beyond” the reasoning principles in the provided examples.

- The effective behavior of the model is generally similar to a random walk through the tree of logical reasoning processes.

These are both good observations and consistent with what I observed in Platypus 70B. I have an additional claim:

- LLaMa 70B generally uses all of the directly observable correlations.

To elaborate on this, LLaMa usually understood that proof formats follow the A->B, B->C format. Further if the thing to be proven was “X is Y”, then LLaMa generally understood that the proof should begin with X and that the proof should end with Y. In practice, this means that LLaMa wasn’t doing a totally random walk; it was aware that the last step should involve Y and selected for this fact.

However, the intermediate steps seemed to be effectively random, hence the ~50% success rate. Although 40 samples is a bit too small to be really confident. LLaMa apparently wasn’t able to identify more complex chains of correlations.

Is it reasoning?

If you think I’m qualified to answer this, you’re kidding yourself. In my opinion, I think the answer is a weak no. LLaMa is copying surface-level similarities among proofs, but it doesn’t seem to be representing any complex relationships internally.

That being said, LLaMa’s outputs are close to reasoning. The space of possible outputs is effectively constrained such that it still contains most reasonable responses in higher-probability regions. It seems plausible that better LLMs would be able to solve these reasoning problems. But would they be solving them with reasoning? This is unclear. They would be performing some kind of complex abstraction, but it may be unlike human reasoning.

I crowdsourced some opinions from The Philosopher’s Meme Discord server. One interesting remark is that some of the failure modes are inconsistent with typical human reasoning. Abnormal failure modes could be a clue that what’s really happening under the hood is not reasoning.

A recurring theme regarded true reasoning vs reasoning-like behavior. The experiments in this paper operate in a vacuum and explicitly avoid opportunties to leverage common sense. One user asked: is a reasoning machine “just one that can evaluate logical propositions with quantifiers in a self-contained context”? It’s true that this definition leaves something to be desired. On the other hand, these experiments do isolate a component of reasoning without burdening us about the issues such as truthfulness in LLMs.

With all that being said, I think this experiment leaves us with more questions than answers. Is Platypus 70B a reasoning machine? It’s not likely. Could a larger model solve these same problems with great accuracy? I believe so. Would such a model be reasoning in a human-like way? This remains to be determined. Exploring failure modes and more diverse reasoning challenges might provide insights into that question.

Conclusion, thanks, and future work

These observations aren’t really that different from the original PrOntoQA paper, although it is interesting to see the methods applied to LLaMa and investigated locally using consumer hardware.

In general, the LLMs demonstrated the ability to identify simple structural correlations among example proofs. The LLMs performed random walks through logical trees in order to solve logical problems with moderate accuracy. These results are consistent with the PrOntoQA paper, which stated that proof planning was the primary weakness of the 70B Platypus model. However, this claim may be an overstatement of the model’s ability. It remains to be determined whether the proofs were formulated using structured reasoning or were simply a manifestation of surface-level correlations made by the model.

I have a few follow-up investigations in mind. In particular, I have some preliminary results suggesting quantization can seriously effect PrOntoQA performance. I would also like to compare base LLaMa models to instruct finetunes. These should be quick and easy now that all the foundation is laid.

There are still so many questions which remain to be answered about the potential capabilities of LLMs. Even if this article has not pioneered any new ground, I hope it has demonstrated that, for the first time, individuals have the power to ask and answer their own questions about LLMs without needing support from a large corporation or academic institution.

I’m far too lazy to thank every individual contributing to the local LLM community right now, so here’s the short list. I would like to thank the maintainers of llama.cpp, kobold.cpp, and PrOntoQA. Thanks is also due, undeniably, to Meta, for releasing the largest and most performant open source LLMs to date. Finally, thanks to Eric Hartford and all of the other hardworking LLaMa finetuners, mergers, and quantizers.

Appendix

LLaMa + PrOntoQA implementation details

The general punchline is that I modified the PrOntoQA code to use an API for LLaMa provided by kobold.cpp.

Once the pipeline was hooked up, it was simply a matter of picking LLaMa models to experiment with. I use the base LLaMa finetunes (The LLaMa 2 13B GGUF quantization, for example) as well as some instruct finetunes like Platypus2 70b.

You can find the code base I used in this fork of PrOntoQA. Integrating kobold.cpp was actually pretty easy, all things considered.

There were some issues using the main branch of PrOntoQA because the statistics that interested me were no longer accurately represented. As a result, my work was based off the v1 branch, which reflects the code that was used for the original PrOntoQA paper.

Prompt example

Here’s one full prompt that might be fed to a model in order to get generate a proof:

Q: Each yumpus is dull. Yumpuses are tumpuses. Each tumpus is not sour. Tumpuses are numpuses. Every numpus is feisty. Each numpus is a wumpus. Each impus is not feisty. Each wumpus is not cold. Wumpuses are zumpuses. Alex is a yumpus. True or false: Alex is not feisty.

A: Alex is a yumpus. Yumpuses are tumpuses. Alex is a tumpus. Tumpuses are numpuses. Alex is a numpus. Every numpus is feisty. Alex is feisty. False

Q: Every tumpus is not earthy. Wumpuses are not red. Wumpuses are vumpuses. Each vumpus is bitter. Vumpuses are zumpuses. Every zumpus is cold. Zumpuses are numpuses. Numpuses are aggressive. Numpuses are dumpuses. Dumpuses are opaque. Dumpuses are yumpuses. Yumpuses are not small. Each yumpus is a rompus. Every rompus is earthy. Each rompus is a jompus. Jompuses are metallic. Each jompus is an impus. Alex is a dumpus. True or false: Alex is not earthy.

A: Alex is a dumpus. Dumpuses are yumpuses. Alex is a yumpus. Each yumpus is a rompus. Alex is a rompus. Every rompus is earthy. Alex is earthy. False

Q: Numpuses are happy. Every numpus is a wumpus. Every wumpus is not liquid. Every wumpus is a rompus. Each rompus is opaque. Dumpuses are not orange. Each rompus is an impus. Impuses are orange. Impuses are vumpuses. Every vumpus is floral. Each vumpus is a zumpus. Every zumpus is dull. Each zumpus is a tumpus. Each tumpus is cold. Tumpuses are yumpuses. Every yumpus is sour. Every yumpus is a jompus. Fae is a wumpus. True or false: Fae is orange.

A: Fae is a wumpus. Every wumpus is a rompus. Fae is a rompus. Each rompus is an impus. Fae is an impus. Impuses are orange. Fae is orange. True

Q: Every jompus is dull. Every jompus is a tumpus. Tumpuses are not spicy. Tumpuses are numpuses. Each numpus is not transparent. Each rompus is fruity. Every numpus is a dumpus. Each dumpus is hot. Dumpuses are vumpuses. Vumpuses are not fruity. Vumpuses are wumpuses. Max is a numpus. True or false: Max is not fruity.

A: Max is a numpus. Every numpus is a dumpus. Max is a dumpus. Dumpuses are vumpuses. Max is a vumpus. Vumpuses are not fruity. Max is not fruity. True

Q: Every rompus is angry. Rompuses are yumpuses. Every yumpus is dull. Yumpuses are wumpuses. Every tumpus is shy. Every wumpus is not shy. Wumpuses are zumpuses. Zumpuses are bitter. Each zumpus is a numpus. Wren is a rompus. True or false: Wren is shy.

A: Wren is a rompus. Rompuses are yumpuses. Wren is a yumpus. Yumpuses are wumpuses. Wren is a wumpus. Every wumpus is not shy. Wren is not shy. False

Q: Zumpuses are not kind. Each wumpus is floral. Every zumpus is a tumpus. Tumpuses are not liquid. Tumpuses are jompuses. Each jompus is not floral. Each jompus is a dumpus. Max is a zumpus. True or false: Max is floral.

A: Max is a zumpus. Every zumpus is a tumpus. Max is a tumpus. Tumpuses are jompuses. Max is a jompus. Each jompus is not floral. Max is not floral. False

Q: Yumpuses are spicy. Yumpuses are tumpuses. Tumpuses are not hot. Each tumpus is a rompus. Each rompus is large. Rompuses are impuses. Every impus is amenable. Impuses are dumpuses. Each dumpus is not dull. Every dumpus is a jompus. Every zumpus is not nervous. Jompuses are nervous. Jompuses are vumpuses. Rex is an impus. True or false: Rex is nervous.

A: Rex is an impus. Impuses are dumpuses. Rex is a dumpus. Every dumpus is a jompus. Rex is a jompus. Jompuses are nervous. Rex is nervous. True

Q: Every impus is not hot. Each impus is a zumpus. Every zumpus is dull. Each zumpus is a jompus. Each jompus is earthy. Jompuses are numpuses. Every numpus is sweet. Numpuses are rompuses. Rompuses are large. Rompuses are wumpuses. Each dumpus is not aggressive. Each wumpus is aggressive. Every wumpus is a vumpus. Each vumpus is not blue. Vumpuses are tumpuses. Tumpuses are transparent. Every tumpus is a yumpus. Polly is a numpus. True or false: Polly is not aggressive.

A: Polly is a numpus. Numpuses are rompuses. Polly is a rompus. Rompuses are wumpuses. Polly is a wumpus. Each wumpus is aggressive. Polly is aggressive. False

Q: Every dumpus is opaque. Each dumpus is a jompus. Jompuses are not feisty. Wumpuses are not wooden. Jompuses are impuses. Impuses are kind. Each impus is a numpus. Numpuses are not orange. Numpuses are vumpuses. Vumpuses are not bitter. Every vumpus is a rompus. Rompuses are small. Every rompus is a yumpus. Yumpuses are not earthy. Yumpuses are zumpuses. Zumpuses are wooden. Zumpuses are tumpuses. Alex is a rompus. True or false: Alex is wooden.

A:

Experiment details

All of the KoboldCPP sampling settings are configured as default in my fork of PrOntoQA. Temp 0 and repetition penalty 1.0 are the major ones. The command I used to run the tests was

python run_experiment.py --model-name llama --model-size 0

You can generate some interesting statistics by running commands such as

python analyze_results.py results_v1/platypus2-instruct-70b/llama_3hop.log

All of my results are stored in the github repo in the results_v1 folder.

In general, both the experiment itself and the analysis should be pretty easy to replicate. All you need to do is set up kobold.cpp and clone my PrOntoQA fork. This ease of use is NOT due to my own competence, but rather due to the hard work of the kobold.cpp and PrOntoQA maintainers.

Regarding the models, I used the Q4_K_M quantization for all three models.